Empower your team with the tools to evaluate, analyze, and improve your LLM applications. Develop LLM applications and deploy them to production with confidence.


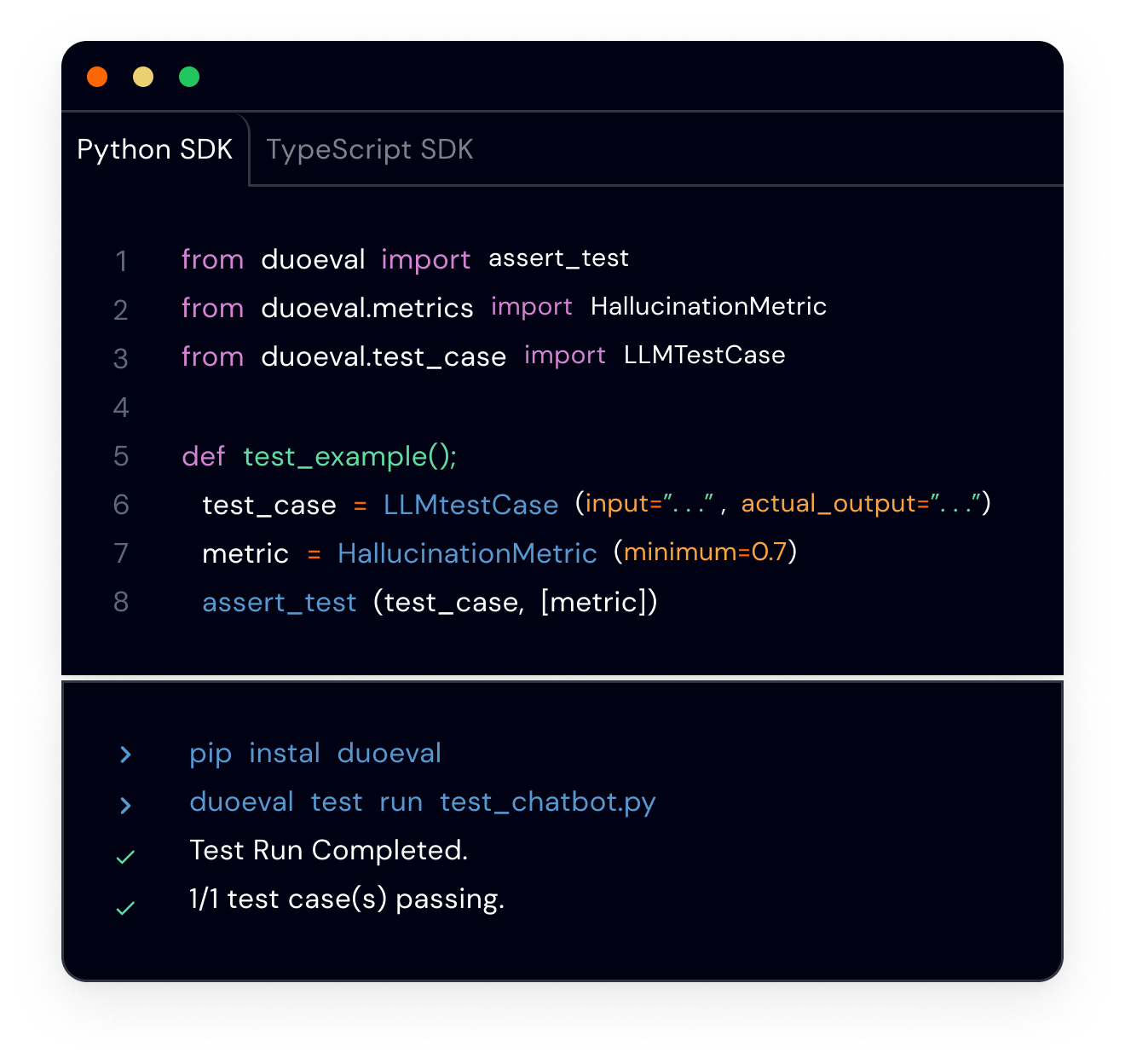

Automate the evaluation and benchmarking of LLMs by using LLMs as automated judges that score responses against ground-truth data.

Access popular evaluation frameworks such as RAGAs and ARES out of the box.

Customize your metrics and evaluators to align with your specific use cases and objectives.

Generate reference questions and answers (ground truth) from your own dataset.

Use this synthetic data as the basis for evaluation or to augment your existing dataset.

Alternatively, supply your own questions and reference answers for evaluation.

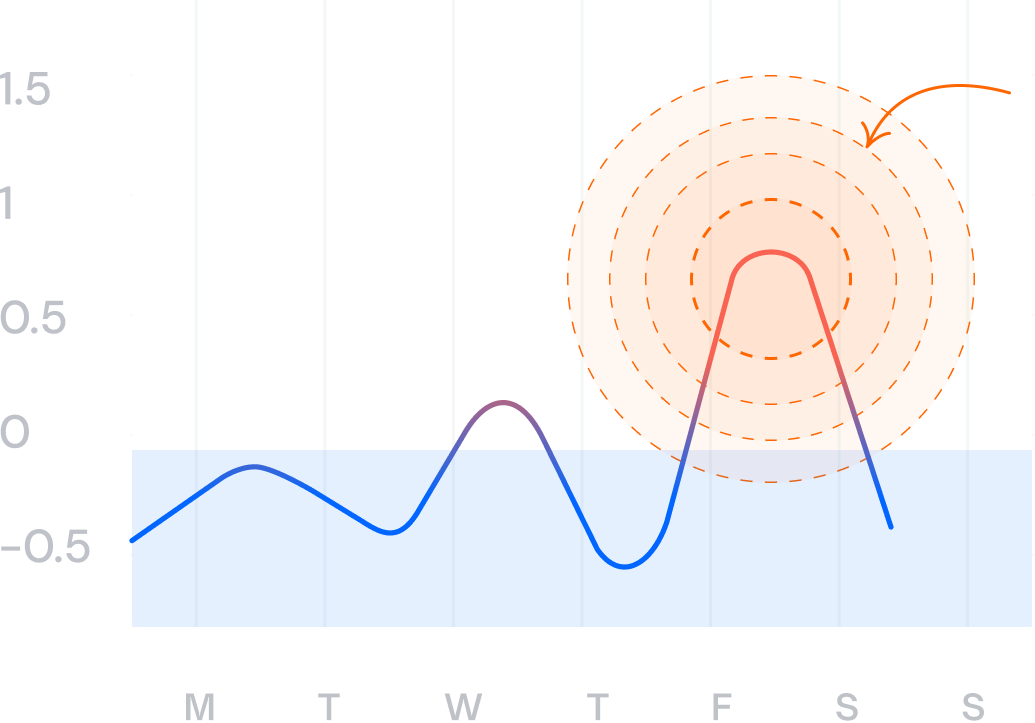

Analyze test results with ease.

Trace and debug your configuration and models to pinpoint areas for optimization and improvement.

Verify the impact of your changes and model updates on response quality.

Designed and developed by a team of serial entrepreneurs with previous successful exits in the B2B and SaaS space. We believe AI can transform businesses and enterprises and change the way we work.

Get in touch with DuoEval today for a demo account.